What is caching, and what are its benefits in web applications?

Caching is the process of storing copies of data in a cache, typically in RAM, so that it can be accessed more quickly. Reading data from a cache is usually much faster than reading it directly from a database.

Here are some benefits of using caching:

- A cache is faster because it keeps data in main memory, whereas a database typically reads from disk. Serving data from the cache makes the application respond faster.

- A cache intercepts requests before they reach the database, which reduces the number of requests the database has to handle. This improves the overall performance of the application.

- Writing directly to the database is generally costly. Lower-priority data can be written to the cache first and then persisted to the database with periodic batch operations. This helps bring down the overall cost of the application.
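
The read and write benefits above can be illustrated with a small sketch. It is only an illustration under stated assumptions: `SlowDatabase` and `CachedStore` are hypothetical names, the "database" is a plain in-memory dictionary that counts how often it is hit, and `flush()` stands in for the periodic batch job.

```python
class SlowDatabase:
    """Hypothetical stand-in for a real database; it counts how often it is hit."""

    def __init__(self):
        self.rows = {}
        self.reads = 0
        self.writes = 0

    def read(self, key):
        self.reads += 1
        return self.rows.get(key)

    def write_many(self, items):
        # One batch write, no matter how many keys it carries.
        self.writes += 1
        self.rows.update(items)


class CachedStore:
    """Serves reads from memory and defers writes until flush() is called."""

    def __init__(self, db):
        self.db = db
        self.cache = {}   # in-memory copies of values
        self.dirty = {}   # written values not yet persisted to the database

    def get(self, key):
        if key in self.cache:          # cache hit: the database is not touched
            return self.cache[key]
        value = self.db.read(key)      # cache miss: fall back to the database
        if value is not None:
            self.cache[key] = value
        return value

    def put(self, key, value):
        self.cache[key] = value
        self.dirty[key] = value        # remember the write for the next batch

    def flush(self):
        # Assumed to be called periodically, e.g. by a scheduler.
        if self.dirty:
            self.db.write_many(self.dirty)
            self.dirty = {}
```

With this sketch, reading the same key twice hits the database only once, and any number of `put` calls between two `flush()` calls cost a single database write. The trade-off is that values written since the last flush exist only in memory, so they would be lost if the process crashed before the next batch.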

What is distributed caching, and why is it needed?

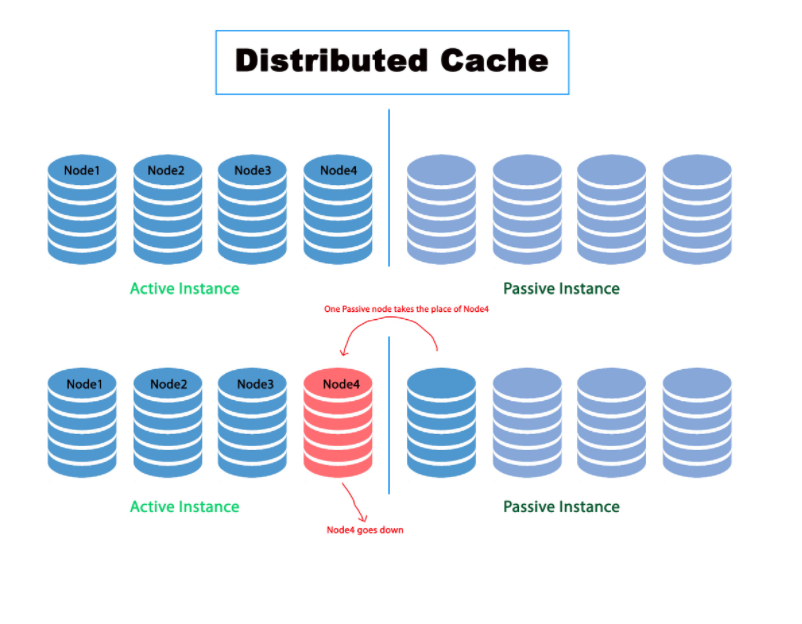

A distributed cache is a system that pools the random-access memory (RAM) of multiple networked computers into a single in-memory data store, which is used as a cache to provide fast access to data.

Why do we need a distributed cache system?

Distributed caching helps applications meet today’s increasingly demanding transaction performance requirements, which a traditional single-node cache often cannot do.

- Distributed caching lets you load-balance web traffic over several application servers without losing session data if an application server fails.

- Caching data in multiple places in your network, including on the same computers as your application, reduces network traffic and frees up bandwidth for other applications that depend on the network.

- Distributed caching is widely used in the industry today because it can scale on demand and remain highly available.

- Scalability, high availability, and fault tolerance are crucial to the large-scale services running online today.

- Many online businesses need to keep their services online all the time. Think about health services, stock markets, and the military. They cannot afford downtime, so their systems are distributed across multiple nodes with substantial redundancy.

How is distributed caching different from local caching?

A distributed cache is specifically designed to let a system scale quickly and easily, whether in storage, compute, or any other resource.

A local cache is hosted on a limited number of instances, so it is difficult to scale quickly. Its availability and fault tolerance are inferior to those of a distributed cache design.

How does distributed caching work?

A distributed cache is essentially a distributed hash table (DHT), which is responsible for mapping keys to object values that are spread across multiple nodes.

Major events such as the addition, removal, and failure of nodes are handled continuously by the distributed hash table for as long as the cache service is online.
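
One common way to spread keys across nodes so that adding or removing a node disturbs as few keys as possible is consistent hashing. The sketch below is a minimal illustration of that technique, not a description of how any particular product does it. The class name `HashRing`, the node names, and the `replicas` parameter (virtual points per node) are assumptions made for the example.

```python
import bisect
import hashlib


class HashRing:
    """Minimal consistent-hash ring that maps keys to node names."""

    def __init__(self, nodes=(), replicas=100):
        self.replicas = replicas  # virtual points per node, smooths the distribution
        self._ring = []           # sorted list of (hash, node) points
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(value):
        digest = hashlib.sha256(value.encode("utf-8")).hexdigest()
        return int(digest, 16)

    def add_node(self, node):
        for i in range(self.replicas):
            bisect.insort(self._ring, (self._hash(f"{node}#{i}"), node))

    def remove_node(self, node):
        # Also covers node failure: drop every point that belonged to the node.
        self._ring = [point for point in self._ring if point[1] != node]

    def node_for(self, key):
        if not self._ring:
            raise LookupError("no nodes in the ring")
        # First point clockwise from the key's hash, wrapping around at the end.
        idx = bisect.bisect_right(self._ring, (self._hash(key), ""))
        if idx == len(self._ring):
            idx = 0
        return self._ring[idx][1]
```

For example, after `ring = HashRing(["cache-a", "cache-b", "cache-c"])`, calling `ring.remove_node("cache-b")` only reassigns the keys that lived on `cache-b`. Every other key keeps mapping to the node it had before, which is the property that makes node changes cheap.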

Caches commonly evict data using an LRU (Least Recently Used) policy. An LRU cache typically uses a doubly linked list to keep the data pointers in order of use, which is the most important part of the data structure, usually alongside a hash table that locates each entry in the list.
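
A minimal single-node sketch of LRU eviction is shown below, assuming the usual pairing of a doubly linked list with a dictionary. The names `LRUCache` and `_Node` are made up for this example, and real caches add concerns such as expiry times and thread safety that are left out here.

```python
class _Node:
    """One entry in the doubly linked list."""
    __slots__ = ("key", "value", "prev", "next")

    def __init__(self, key=None, value=None):
        self.key = key
        self.value = value
        self.prev = None
        self.next = None


class LRUCache:
    def __init__(self, capacity):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._index = {}        # key -> node, for O(1) lookup
        self._head = _Node()    # sentinel on the most recently used side
        self._tail = _Node()    # sentinel on the least recently used side
        self._head.next = self._tail
        self._tail.prev = self._head

    def _unlink(self, node):
        node.prev.next = node.next
        node.next.prev = node.prev

    def _push_front(self, node):
        node.prev = self._head
        node.next = self._head.next
        self._head.next.prev = node
        self._head.next = node

    def get(self, key, default=None):
        node = self._index.get(key)
        if node is None:
            return default
        # A read counts as a use, so move the entry to the front.
        self._unlink(node)
        self._push_front(node)
        return node.value

    def put(self, key, value):
        node = self._index.get(key)
        if node is not None:
            node.value = value
            self._unlink(node)
            self._push_front(node)
            return
        if len(self._index) >= self.capacity:
            lru = self._tail.prev   # the entry that was used longest ago
            self._unlink(lru)
            del self._index[lru.key]
        node = _Node(key, value)
        self._index[key] = node
        self._push_front(node)
```

Because the list keeps entries in order of use, both moving an entry to the front and evicting from the back are constant-time pointer updates. The dictionary avoids walking the list to find a key.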

Limitations of Distributed Cache:

While there are many benefits of using a distributed cache, the main downside is the cost of RAM. Since RAM costs are significantly higher than disk or SSD costs, in-memory speed is not easily available to everyone.

Businesses that use large distributed caches can typically justify the hardware expense through the quantifiable gains of having a faster system. However, as RAM becomes more affordable, in-memory processing is becoming more mainstream for all businesses.

Popular Distributed Caching Systems:

Memcached (often written Memcache) is one of the most popular caches, and a memcache service is offered by Google Cloud in its Platform as a Service.

Redis is another popular open-source, in-memory distributed system. It also supports other data structures such as lists, queues, strings, sets, and sorted sets. Besides caching, Redis is also often used as a NoSQL data store.